Meta said on Thursday that it is building more safeguards to protect teen users from unwanted direct messages on Instagram and Facebook.

The move comes weeks after the WhatsApp owner said it would hide more content from teens, following pressure from regulators on the world's most popular social media network to protect children from harmful content on its apps.

The regulatory scrutiny increased following testimony in the United States Senate by a former Meta employee who claimed the company was aware of harassment and other harm to teens on its platforms but did not take action to address it.

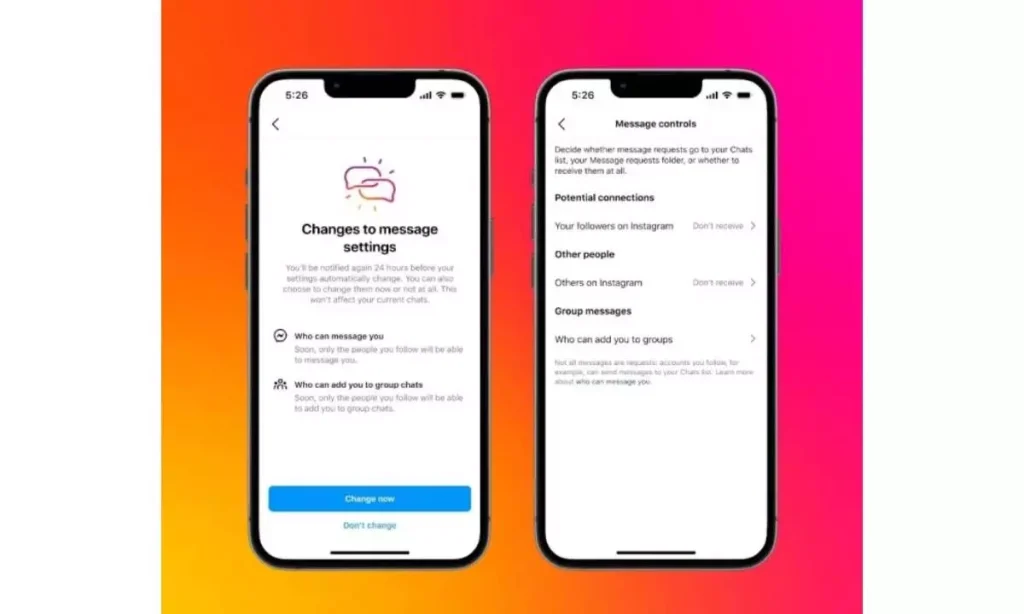

By default, teens on Instagram will no longer receive direct messages from anyone they do not follow or are not connected to, Meta said. Teens will also need parental approval to change certain settings on the app.

On Messenger, users under 16, or under 18 in some countries, will only receive messages from their Facebook friends or from people they are connected to through phone contacts.

Adults over the age of 19 cannot message teens who don’t follow them, Meta added.